The NeuThrone sunglasses use techniques that exploit vulnerabilities in AI image tools such as StableDiffusion. They introduce visual noise, a sort of “deepfake camouflage”, that confuses these models and cripples their ability to copy and reproduce images. The approach traces its origins to techniques such as CV Dazzle, Fawkes, and Nightshade.

The sunglasses carry a small amount of visual noise that, although generally imperceptible to the human eye, diffusion models mistake for important information about the subject. As a result, when photographs of people wearing the sunglasses are fed into a diffusion model’s training set, the resulting model fails to faithfully reproduce the image.
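The general principle can be illustrated with a toy version of the optimization used by cloaking tools such as Fawkes: search for a small perturbation, capped at a per-pixel budget `eps`, that pushes a model’s feature representation toward a decoy target. This is a minimal sketch under loudly stated assumptions: the linear “feature extractor” `W`, the pixel values, the decoy target `t`, and the budget are all hypothetical. Real tools optimize against deep networks with perceptual rather than per-pixel budgets, a pattern printed on physical sunglasses would presumably also have to survive changes in lighting, angle, and camera, and this article does not say how the NeuThrone pattern is actually generated.

```python
# Toy projected sign-gradient descent: a bounded perturbation that steers features.
# ASSUMPTIONS: W, x, t, eps, step and iters are made-up stand-ins, not NeuThrone's method.

def features(W, v):
    """Linear stand-in for a model's feature extractor: returns W @ v."""
    return [sum(w * vi for w, vi in zip(row, v)) for row in W]

def loss(W, v, t):
    """Squared distance between the features of v and the decoy target t."""
    return sum((f - ti) ** 2 for f, ti in zip(features(W, v), t))

def sign(a):
    return (a > 0) - (a < 0)

def cloak(W, x, t, eps=0.03, step=0.01, iters=10):
    """Find delta with |delta_i| <= eps that pulls features(x + delta) toward t."""
    delta = [0.0] * len(x)
    for _ in range(iters):
        v = [xi + di for xi, di in zip(x, delta)]
        r = [f - ti for f, ti in zip(features(W, v), t)]  # feature residual
        # Gradient of ||W v - t||^2 w.r.t. v (and hence delta) is 2 * W^T r.
        grad = [2 * sum(W[k][j] * r[k] for k in range(len(W))) for j in range(len(x))]
        for j in range(len(x)):
            d = delta[j] - step * sign(grad[j])      # step downhill on the decoy loss
            d = max(-eps, min(eps, d))               # project back into the eps-box
            d = max(0.0, min(1.0, x[j] + d)) - x[j]  # keep the pixel inside [0, 1]
            delta[j] = d
    return delta

W = [[1.0, 0.5, 0.0], [0.0, 1.0, -0.5]]  # hypothetical feature extractor
x = [0.5, 0.5, 0.5]                      # "clean" pixel values
t = [0.9, 0.1]                           # decoy features to steer toward
delta = cloak(W, x, t)
cloaked = [xi + di for xi, di in zip(x, delta)]
print(max(abs(d) for d in delta))          # perturbation stays within eps
print(loss(W, x, t), loss(W, cloaked, t))  # decoy loss before vs. after
```

The per-pixel cap is what keeps the change small for a human viewer, while the gradient steps decide where that small budget is spent so the model’s view of the image changes as much as possible.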

Fine-tuning StableDiffusion on a new subject typically works like this: a user gathers a dataset of images (sometimes as few as 10 or so) of the subject they want StableDiffusion to produce. The images could be of polar bears, of Van Gogh paintings, or of a specific human being. The user then runs a training program such as Kohya (kohya_ss) or Civitai’s online trainer to produce either a “checkpoint”, a complete fine-tuned copy of the model, or a “LoRA”, a much smaller file of low-rank weight adjustments that is loaded on top of an existing base model. Either way, the result is a version of StableDiffusion specialized in creating images of polar bears, Van Gogh paintings, or that specific human being.
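To make the checkpoint/LoRA distinction concrete, here is a minimal sketch of the low-rank update a LoRA learns for a single weight matrix: two thin factors `B` and `A` whose product, scaled by `alpha / rank`, is added to the frozen base weights. The layer size, rank, and `alpha` values below are illustrative assumptions; a real StableDiffusion LoRA attaches factors like these to many layers at once.

```python
# Sketch of the low-rank update a LoRA learns for ONE weight matrix (pure Python).
# ASSUMPTIONS: sizes, rank and alpha are illustrative only.

def matmul(X, Y):
    """Plain nested-list matrix product X @ Y."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]

def lora_delta(B, A, alpha, rank):
    """Weight update (alpha / rank) * B @ A; B is d_out x rank, A is rank x d_in."""
    scale = alpha / rank
    return [[scale * v for v in row] for row in matmul(B, A)]

def trainable_params(d_out, d_in, rank):
    """Parameters trained for one layer: (full fine-tune, rank-r LoRA)."""
    return d_out * d_in, rank * (d_out + d_in)

# Rank-1 toy example: a 2x1 factor times a 1x3 factor yields a full 2x3 update.
B = [[1.0], [2.0]]
A = [[0.5, 0.0, -1.0]]
print(lora_delta(B, A, alpha=1.0, rank=1))

# For a hypothetical 768x768 layer at rank 8, the LoRA trains about 2% as many parameters.
print(trainable_params(768, 768, 8))
```

Because only the small factors are trained and stored, a LoRA file is far smaller than a full checkpoint, which is part of why LoRAs are so easy to share.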

The user also feeds the training program a set of “regularization images”: generic images of the parent category of the subject being reproduced (think: animals in general, paintings in general, or humans in general). These give the LoRA some understanding of the general domain it’s operating in, which it can draw on when it needs other knowledge.
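Regularization images correspond to what the DreamBooth approach calls prior preservation: the training loss combines an error term on the subject’s own images with a weighted error term on the generic class images, so the model keeps its knowledge of the broader category. The sketch below shows only that general form; the toy vectors and the `prior_weight` default are assumptions, and trainers such as Kohya apply the idea to latent-noise predictions in their own way.

```python
# Sketch of a prior-preservation style loss, the idea behind "regularization images".
# ASSUMPTIONS: toy prediction/target vectors and a hypothetical prior_weight default.

def mse(pred, target):
    """Mean squared error between two equal-length vectors."""
    return sum((p - q) ** 2 for p, q in zip(pred, target)) / len(pred)

def training_loss(inst_pred, inst_target, reg_pred, reg_target, prior_weight=1.0):
    """Loss on the subject's images plus a weighted loss on generic class images."""
    return mse(inst_pred, inst_target) + prior_weight * mse(reg_pred, reg_target)

# Subject batch error 0.5, class batch error 0.25, weighted by 2.0.
print(training_loss([1.0, 0.0], [0.0, 0.0], [0.5, 0.5], [0.0, 0.0], prior_weight=2.0))
```

Raising `prior_weight` pulls the model harder toward the generic category; setting it to zero trains on the subject alone.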

These LoRAs can be bought, sold, traded, downloaded, and used in other users’ StableDiffusion environments.

Once a LoRA is installed, prompts for the desired subject are very effective at reproducing it.
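At generation time, an installed LoRA’s update is added to the base model’s weights, and many front ends let the user scale it with a strength multiplier. The sketch below assumes toy 2x2 weights and a rank-1 LoRA; the exact way each tool scales and applies LoRA weights may differ.

```python
# Sketch: applying a LoRA to one base weight matrix at a chosen strength.
# ASSUMPTIONS: toy matrices; "strength" mirrors the multiplier many front ends
# expose, but each tool's exact scaling may differ.

def apply_lora(W, B, A, alpha, rank, strength=1.0):
    """Return W + strength * (alpha / rank) * (B @ A) without modifying W."""
    scale = strength * alpha / rank
    BA = [[sum(b * a for b, a in zip(row, col)) for col in zip(*A)] for row in B]
    return [[w + scale * d for w, d in zip(w_row, d_row)] for w_row, d_row in zip(W, BA)]

W = [[1.0, 0.0], [0.0, 1.0]]  # base-model weights (left untouched)
B = [[0.2], [0.4]]            # rank-1 LoRA factors
A = [[1.0, -1.0]]
print(apply_lora(W, B, A, alpha=1.0, rank=1, strength=0.0))  # base model only
print(apply_lora(W, B, A, alpha=1.0, rank=1, strength=1.0))  # full LoRA effect
```

Keeping the base weights separate is what lets one base model be combined with many different downloaded LoRAs.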

Users can apply these LoRAs in any way they can dream up, limited only by their prompting skills and their ability to further fine-tune the diffusion models.

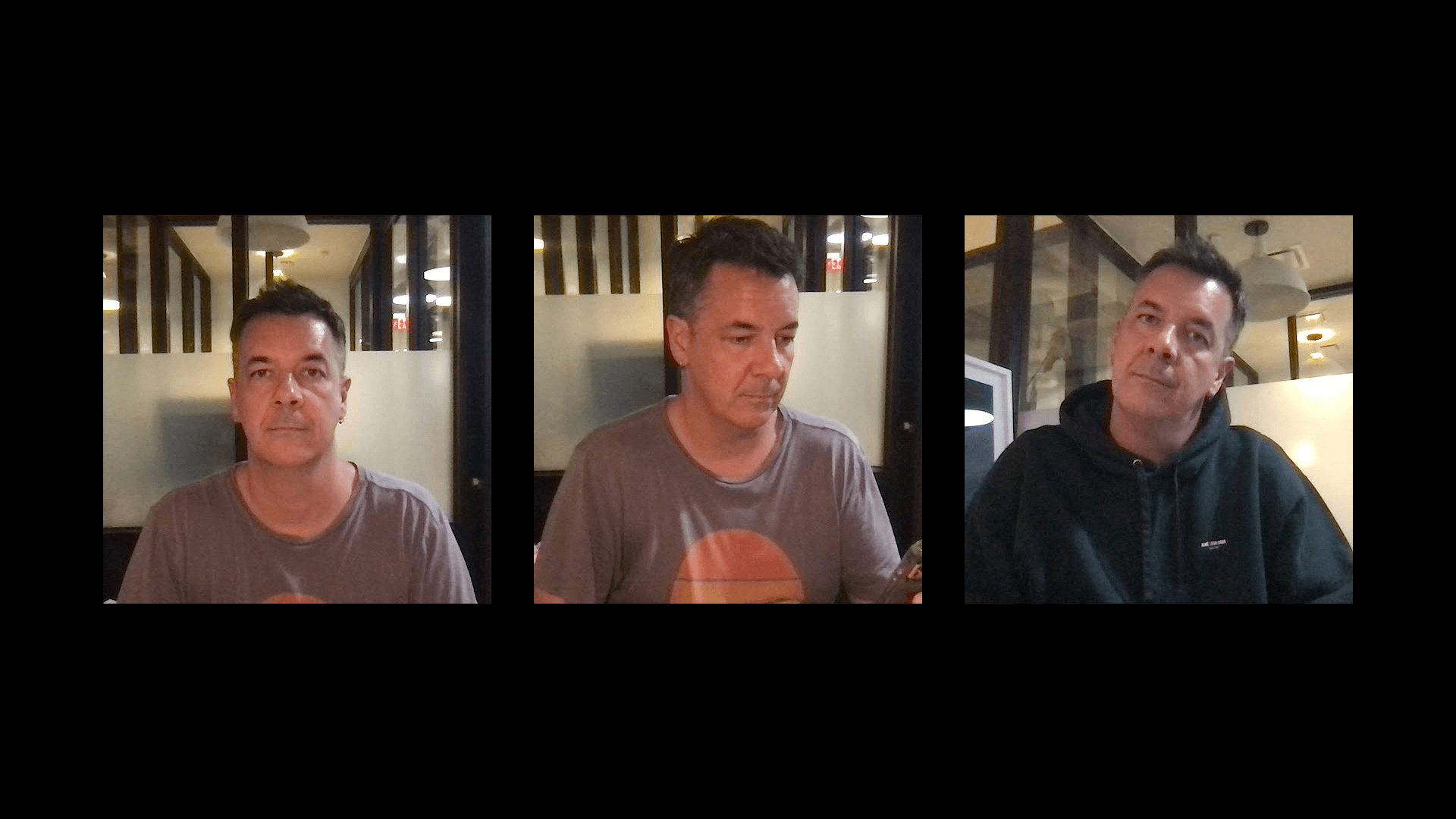

However, when a user tries to train a StableDiffusion LoRA on images that contain the “deepfake camouflage”, such as photos of someone wearing the sunglasses, the resulting LoRA’s performance degrades considerably: it either produces glitchy, unusable images or defaults to generic images of the parent category.